Show Joint Probability of Markov Chain Is Exponential Family

A continuous-time Markov chain (CTMC) is a continuous stochastic process in which, for each state, the process will change state according to an exponential random variable and then move to a different state as specified by the probabilities of a stochastic matrix. An equivalent formulation describes the process as changing state according to the least value of a set of exponential random variables, one for each possible state it can move to, with the parameters determined by the current state.

An example of a CTMC with three states is as follows: the process makes a transition after the amount of time specified by the holding time, an exponential random variable $E_i$, where $i$ is its current state. The holding times are independent of one another, and each state has its own rate parameter. When a transition is to be made, the process moves according to the jump chain, a discrete-time Markov chain whose stochastic matrix gives the probability of moving from each state to each of the others.

Equivalently, by the theory of competing exponentials, this CTMC changes state from state $i$ according to the minimum of two random variables $E_{i,j}$, which are independent and such that $E_{i,j} \sim \operatorname{Exp}(q_{ij})$ for $i \neq j$, where the parameters are given by the entries of the Q-matrix $Q = (q_{ij})$.

Each non-diagonal value can be computed as the product of the rate of the original state's holding time with the probability from the jump chain of moving to the given state. The diagonal values are chosen so that each row sums to 0.
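The construction just described can be sketched in a few lines of Python. The holding-time rates and the jump-chain matrix below are illustrative values chosen for this sketch (they are assumptions, not figures recovered from the text), and `q_matrix` is a hypothetical helper name.

```python
from fractions import Fraction as F

def q_matrix(rates, jump):
    """Build a Q-matrix from holding-time rates and a jump-chain matrix.

    Off-diagonal entry (i, j) is rates[i] * jump[i][j]; each diagonal
    entry is then set so that its row sums to zero.
    """
    n = len(rates)
    q = [[rates[i] * jump[i][j] if i != j else 0 for j in range(n)]
         for i in range(n)]
    for i in range(n):
        q[i][i] = -sum(q[i])
    return q

# Illustrative (assumed) parameters for a three-state chain.
rates = [6, 12, 18]
jump = [
    [0, F(1, 2), F(1, 2)],
    [F(1, 3), 0, F(2, 3)],
    [F(5, 6), F(1, 6), 0],
]

Q = q_matrix(rates, jump)
for row in Q:
    print([int(x) for x in row])
```

Because every row of the jump matrix sums to one and has a zero on the diagonal, the diagonal of `Q` ends up equal to minus the holding-time rate of each state.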

A CTMC satisfies the Markov property, that its behavior depends only on its current state and not on its past behavior, due to the memorylessness of the exponential distribution and of discrete-time Markov chains.

Definition

A continuous-time Markov chain $(X_t)_{t \ge 0}$ is defined by:[1]

- a finite or countable state space $S$;
- a transition rate matrix $Q$ with dimensions equal to that of $S$; and
- an initial state, or a probability distribution for the initial state.

For $i \neq j$, the elements $q_{ij}$ are non-negative and describe the rate at which the process transitions from state $i$ to state $j$. The elements $q_{ii}$ could be chosen to be zero, but for mathematical convenience a common convention is to choose them such that each row of $Q$ sums to zero, that is:

$$q_{ii} = -\sum_{j \neq i} q_{ij}.$$

Note how this differs from the definition of the transition matrix for discrete-time Markov chains, where the row sums are all equal to one.

There are three other definitions of the process, equivalent to the one above.[2]

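Transition probability definition

Another common way to define continuous-time Markov chains is to use, instead of the transition rate matrix $Q$, the following:[1]

- $\lambda_i$, for $i \in S$, the rate of the exponential holding time that the process spends in state $i$ once it enters it; and
- $p_{ij}$, for $i, j \in S$, the probability that the process moves to state $j$, given that it is leaving state $i$.

Naturally, $p_{ii}$ must be zero for all $i$.

The values $\lambda_i$ and $p_{ij}$ are closely related to the transition rate matrix $Q$, by the formulas:

$$q_{ij} = \lambda_i \, p_{ij} \quad \text{for } i \neq j, \qquad q_{ii} = -\sum_{j \neq i} q_{ij} = -\lambda_i.$$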

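Consider an ordered sequence of time instants $t_0 < t_1 < \dots < t_n < t_{n+1}$ and the states $i_0, i_1, \dots, i_{n+1}$ recorded at these times. Then it holds that:

$$\Pr(X_{t_{n+1}} = i_{n+1} \mid X_{t_0} = i_0, X_{t_1} = i_1, \ldots, X_{t_n} = i_n) = p_{i_n i_{n+1}}(t_{n+1} - t_n),$$

where $p_{ij}(t)$ is the $(i, j)$ entry of the solution $P(t)$ of the forward equation (a first-order differential equation):

$$P'(t) = P(t)\,Q,$$

with initial condition $P(0)$ being the identity matrix.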
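Chaining this conditional probability over successive time instants gives the joint probability of a whole observed path, conditional on the first state. The following is a minimal sketch under stated assumptions: the forward equation is integrated numerically with a classical Runge-Kutta scheme (a choice made here for self-containment, not something the text prescribes), and the function names and the two-state rate matrix are hypothetical.

```python
def mat_mul(a, b):
    """Plain-Python product of two square matrices (lists of lists)."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def shifted(p, k, scale):
    """Return p + scale * k, elementwise."""
    return [[x + scale * y for x, y in zip(pr, kr)] for pr, kr in zip(p, k)]

def transition_matrix(q, t, steps=2000):
    """Integrate P'(t) = P(t) Q from P(0) = I with classical RK4."""
    n = len(q)
    p = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    h = t / steps
    for _ in range(steps):
        k1 = mat_mul(p, q)
        k2 = mat_mul(shifted(p, k1, h / 2), q)
        k3 = mat_mul(shifted(p, k2, h / 2), q)
        k4 = mat_mul(shifted(p, k3, h), q)
        for k, w in ((k1, 1), (k2, 2), (k3, 2), (k4, 1)):
            p = shifted(p, k, w * h / 6)
    return p

def path_probability(q, times, states):
    """Probability of seeing states[1:] at times[1:], given states[0] at times[0]."""
    prob = 1.0
    for k in range(len(times) - 1):
        p = transition_matrix(q, times[k + 1] - times[k])
        prob *= p[states[k]][states[k + 1]]
    return prob

# Assumed two-state rate matrix, used only for illustration.
Q = [[-2.0, 2.0], [3.0, -3.0]]
prob = path_probability(Q, times=[0.0, 0.5, 1.5], states=[0, 1, 1])
print(prob)
```

Each factor depends only on the gap between consecutive observation times and on the two states at its ends, which is the Markov property expressed in terms of $P(t)$.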

Infinitesimal definition

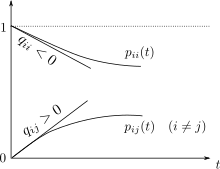

The continuous time Markov chain is characterized by the transition rates, the derivatives with respect to time of the transition probabilities between states i and j.

Let $X_t$ be the random variable describing the state of the process at time $t$, and assume the process is in a state $i$ at time $t$. By definition of the continuous-time Markov chain, $X_{t+h}$ is independent of values prior to instant $t$; that is, it is independent of $(X_s : s < t)$. With that in mind, for all $i, j$, for all $t$ and for small values of $h > 0$, the following holds:

$$\Pr(X_{t+h} = j \mid X_t = i) = \delta_{ij} + q_{ij} h + o(h),$$

where $\delta_{ij}$ is the Kronecker delta and the little-o notation has been employed.

The above equation shows that $q_{ij}$ can be seen as measuring how quickly the transition from $i$ to $j$ happens for $i \neq j$, and how quickly the transition away from $i$ happens for $i = j$.

Jump chain/holding time definition

Define a discrete-time Markov chain $Y_n$ to describe the $n$th jump of the process and variables $S_1, S_2, S_3, \ldots$ to describe holding times in each of the states, where $S_i$ follows the exponential distribution with rate parameter $-q_{Y_i Y_i}$.
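The jump chain/holding time description translates directly into a simulation loop. The sketch below is one possible reading of it, assuming states are numbered from 0 and the chain is given by its rate matrix; `simulate_ctmc` and the example matrix are hypothetical names and values introduced for illustration.

```python
import random

def simulate_ctmc(q, start, t_end, rng):
    """Simulate one path up to time t_end; returns a list of (jump_time, state)."""
    t, state = 0.0, start
    path = [(t, state)]
    while True:
        rate = -q[state][state]          # rate of the holding time in this state
        if rate <= 0:                    # absorbing state: no further jumps
            break
        t += rng.expovariate(rate)       # exponential holding time
        if t >= t_end:
            break
        # Jump chain: move to j != state with probability q[state][j] / rate.
        others = [j for j in range(len(q)) if j != state]
        weights = [q[state][j] for j in others]
        state = rng.choices(others, weights=weights)[0]
        path.append((t, state))
    return path

# Assumed three-state rate matrix, for illustration only.
Q = [[-6, 3, 3], [4, -12, 8], [15, 3, -18]]
path = simulate_ctmc(Q, start=0, t_end=5.0, rng=random.Random(0))
print(len(path), path[:3])
```

Passing the random generator in explicitly keeps runs reproducible; the holding times come from `expovariate` and the jump targets from `choices`, mirroring the two ingredients of the definition.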

Properties

Communicating classes

Communicating classes, transience, recurrence and positive and null recurrence are defined identically as for discrete-time Markov chains.

Transient behaviour

Write $P(t)$ for the matrix with entries $p_{ij} = P(X_t = j \mid X_0 = i)$. Then the matrix $P(t)$ satisfies the forward equation, a first-order differential equation

$$P'(t) = P(t)\,Q,$$

where the prime denotes differentiation with respect to $t$. The solution to this equation is given by a matrix exponential

$$P(t) = e^{tQ}.$$

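In a simple case, such as a CTMC on the state space $\{1, 2\}$, the general $Q$ matrix is the following $2 \times 2$ matrix with $\alpha, \beta > 0$:

$$Q = \begin{pmatrix} -\alpha & \alpha \\ \beta & -\beta \end{pmatrix}.$$

The forward equation can be solved explicitly in this case to give

$$P(t) = \begin{pmatrix} \frac{\beta}{\alpha+\beta} + \frac{\alpha}{\alpha+\beta} e^{-(\alpha+\beta)t} & \frac{\alpha}{\alpha+\beta} - \frac{\alpha}{\alpha+\beta} e^{-(\alpha+\beta)t} \\ \frac{\beta}{\alpha+\beta} - \frac{\beta}{\alpha+\beta} e^{-(\alpha+\beta)t} & \frac{\alpha}{\alpha+\beta} + \frac{\beta}{\alpha+\beta} e^{-(\alpha+\beta)t} \end{pmatrix}.$$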
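For the two-state chain with rates $\alpha$ (from state 1 to 2) and $\beta$ (from state 2 to 1), the explicit solution can be compared against a direct numerical evaluation of the matrix exponential $e^{tQ}$. The sketch below uses a truncated Taylor series with scaling and squaring, written in plain Python so that it is self-contained; the helper names and the values of $\alpha$, $\beta$ and $t$ are assumptions made for illustration, and a numerical library routine would normally be preferable in practice.

```python
import math

def mat_mul(a, b):
    """Plain-Python product of two square matrices (lists of lists)."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def expm(q, t, squarings=10, terms=20):
    """Approximate e^{tQ}: Taylor series of e^{tQ / 2^s}, then square s times."""
    n = len(q)
    scale = t / 2 ** squarings
    a = [[x * scale for x in row] for row in q]
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms + 1):
        term = [[x / k for x in row] for row in mat_mul(term, a)]  # a^k / k!
        result = [[r + x for r, x in zip(rr, tr)] for rr, tr in zip(result, term)]
    for _ in range(squarings):
        result = mat_mul(result, result)
    return result

def two_state_closed_form(alpha, beta, t):
    """Explicit P(t) for Q = [[-alpha, alpha], [beta, -beta]]."""
    s = alpha + beta
    e = math.exp(-s * t)
    return [[beta / s + alpha / s * e, alpha / s - alpha / s * e],
            [beta / s - beta / s * e, alpha / s + beta / s * e]]

alpha, beta, t = 2.0, 3.0, 0.7   # assumed illustrative values
P_series = expm([[-alpha, alpha], [beta, -beta]], t)
P_closed = two_state_closed_form(alpha, beta, t)
max_gap = max(abs(P_series[i][j] - P_closed[i][j])
              for i in range(2) for j in range(2))
print(max_gap)
```

The printed gap should be at the level of floating-point rounding, which is a quick sanity check on both the closed form and the series evaluation.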

However, direct solutions are complicated to compute for larger matrices. Instead, the fact that $Q$ is the generator for a semigroup of matrices

$$P(t+s) = e^{(t+s)Q} = e^{tQ} e^{sQ} = P(t)\,P(s)$$

is used.

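Stationary distribution

The stationary distribution for an irreducible recurrent CTMC is the probability distribution to which the process converges for large values of $t$. Observe that for the two-state process considered earlier with $P(t)$ given by

$$P(t) = \begin{pmatrix} \frac{\beta}{\alpha+\beta} + \frac{\alpha}{\alpha+\beta} e^{-(\alpha+\beta)t} & \frac{\alpha}{\alpha+\beta} - \frac{\alpha}{\alpha+\beta} e^{-(\alpha+\beta)t} \\ \frac{\beta}{\alpha+\beta} - \frac{\beta}{\alpha+\beta} e^{-(\alpha+\beta)t} & \frac{\alpha}{\alpha+\beta} + \frac{\beta}{\alpha+\beta} e^{-(\alpha+\beta)t} \end{pmatrix},$$

as $t \to \infty$ the distribution tends to

$$P_\pi = \begin{pmatrix} \frac{\beta}{\alpha+\beta} & \frac{\alpha}{\alpha+\beta} \\ \frac{\beta}{\alpha+\beta} & \frac{\alpha}{\alpha+\beta} \end{pmatrix}.$$

Observe that each row has the same distribution, as this does not depend on the starting state. The row vector $\pi$ may be found by solving[3]

$$\pi Q = 0$$

with the additional constraint that

$$\sum_{i \in S} \pi_i = 1.$$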
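Solving $\pi Q = 0$ together with the normalisation $\sum_i \pi_i = 1$ is a small linear-algebra problem. A minimal sketch, assuming a finite irreducible chain so that the system has a unique solution: it replaces one balance equation by the normalisation and runs Gauss-Jordan elimination in exact rational arithmetic. The function name and the example matrices are hypothetical.

```python
from fractions import Fraction

def stationary_distribution(q):
    """Solve pi Q = 0 with sum(pi) = 1 for a finite irreducible chain."""
    n = len(q)
    # Row i of the augmented system is column i of Q (i.e. Q transposed) = 0.
    a = [[Fraction(q[j][i]) for j in range(n)] + [Fraction(0)] for i in range(n)]
    # The balance equations are linearly dependent, so swap the last one
    # for the normalisation constraint sum(pi) = 1.
    a[-1] = [Fraction(1)] * n + [Fraction(1)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if a[r][col] != 0)
        a[col], a[pivot] = a[pivot], a[col]
        a[col] = [x / a[col][col] for x in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0:
                factor = a[r][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return [a[i][n] for i in range(n)]

# Two-state chain with alpha = 2, beta = 3 (assumed values).
pi_two = stationary_distribution([[-2, 2], [3, -3]])
print(pi_two)

# Assumed three-state rate matrix, for illustration only.
Q3 = [[-6, 3, 3], [4, -12, 8], [15, 3, -18]]
pi_three = stationary_distribution(Q3)
print(pi_three)
```

For the two-state case the result agrees with the limiting rows $(\beta/(\alpha+\beta),\ \alpha/(\alpha+\beta))$ obtained from $P(t)$.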

Example 1

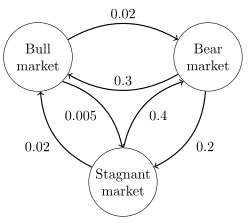

Directed graph representation of a continuous-time Markov chain describing the state of financial markets (note: numbers are made-up).

The image to the right describes a continuous-time Markov chain with state-space {Bull market, Bear market, Stagnant market} and a transition rate matrix $Q$ whose entries are the rates labelling the edges of the graph.

The stationary distribution of this chain can be found by solving $\pi Q = 0$, subject to the constraint that the elements of $\pi$ must sum to 1.

Example 2

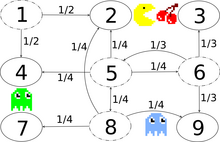

Transition graph with transition probabilities, exemplary for the states 1, 5, 6 and 8. There is a bidirectional secret passage between states 2 and 8.

The image to the right describes a discrete-time Markov chain modeling Pac-Man with state-space {1,2,3,4,5,6,7,8,9}. The player controls Pac-Man through a maze, eating pac-dots. Meanwhile, he is being hunted by ghosts. For convenience, the maze shall be a small 3x3-grid and the monsters move randomly in horizontal and vertical directions. A secret passageway between states 2 and 8 can be used in both directions. In the transition rate matrix $Q$ of this chain, entries with probability zero are omitted.

This Markov chain is irreducible, because the ghosts can fly from every state to every state in a finite amount of time. Due to the secret passageway, the Markov chain is also aperiodic, because the monsters can move from any state to any state both in an even and in an uneven number of state transitions. Therefore, a unique stationary distribution exists and can be found by solving $\pi Q = 0$, subject to the constraint that the elements of $\pi$ must sum to 1. The central state and the border states 2 and 8 adjacent to the secret passageway are visited most, and the corner states are visited least.
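The ranking of states by visit frequency can be reproduced with a short computation. This sketch rests on two assumptions that the text does not spell out: the cells are numbered 1 to 9 row by row, and a ghost moves to each adjacent cell (including the other end of the secret passage) with equal probability. Under those assumptions the chain is a random walk on a graph, whose stationary probabilities are proportional to the number of neighbours of each state.

```python
from fractions import Fraction

def pacman_neighbors():
    """Adjacency of the 3x3 grid (cells 1..9, row by row) plus the 2-8 passage."""
    nbrs = {s: set() for s in range(1, 10)}

    def link(a, b):
        nbrs[a].add(b)
        nbrs[b].add(a)

    for s in range(1, 10):
        row, col = divmod(s - 1, 3)
        if col < 2:
            link(s, s + 1)   # horizontal neighbour
        if row < 2:
            link(s, s + 3)   # vertical neighbour
    link(2, 8)               # bidirectional secret passage
    return nbrs

nbrs = pacman_neighbors()
total = sum(len(v) for v in nbrs.values())
# Candidate stationary distribution: proportional to the number of neighbours.
pi = {s: Fraction(len(nbrs[s]), total) for s in nbrs}

def step(dist):
    """Push a distribution through one move of the uniform random walk."""
    out = {s: Fraction(0) for s in nbrs}
    for s, mass in dist.items():
        for n in nbrs[s]:
            out[n] += mass / len(nbrs[s])
    return out

print(step(pi) == pi)
print({s: float(p) for s, p in pi.items()})
```

With these assumptions, states 2, 5 and 8 each carry probability 2/13, the other two border states 3/26, and the corners 1/13, in line with the qualitative statement in the text.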

Time reversal

For a CTMC $X_t$, the time-reversed process is defined to be $\hat{X}_t = X_{T-t}$. By Kelly's lemma this process has the same stationary distribution as the forward process.

A chain is said to be reversible if the reversed process is the same as the forward process. Kolmogorov's criterion states that the necessary and sufficient condition for a process to be reversible is that the product of transition rates around a closed loop must be the same in both directions.
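For a small chain, Kolmogorov's criterion can be checked by brute force. The sketch below enumerates every simple loop through three or more states and compares the product of rates in both directions; it is an illustrative check that scales factorially with the number of states, and the function name and both example matrices are assumptions introduced here.

```python
from itertools import permutations

def satisfies_kolmogorov(q, tol=1e-12):
    """Compare rate products around every simple loop of length >= 3."""
    n = len(q)
    for length in range(3, n + 1):
        for cycle in permutations(range(n), length):
            if cycle[0] != min(cycle):      # skip rotations of the same loop
                continue
            forward = backward = 1.0
            for k in range(length):
                i, j = cycle[k], cycle[(k + 1) % length]
                forward *= q[i][j]          # rate i -> j along the loop
                backward *= q[j][i]         # rate j -> i against the loop
            if abs(forward - backward) > tol * max(1.0, forward, backward):
                return False
    return True

# Birth-death chain: only nearest-neighbour moves, so every loop product is 0.
birth_death = [[-1, 1, 0], [2, -3, 1], [0, 2, -2]]
# Purely cyclic chain 0 -> 1 -> 2 -> 0: forward product 1, backward product 0.
cyclic = [[-1, 1, 0], [0, -1, 1], [1, 0, -1]]

print(satisfies_kolmogorov(birth_death))
print(satisfies_kolmogorov(cyclic))
```

Loops of length two are skipped because their forward and backward products are the same two rates multiplied in a different order.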

Embedded Markov chain

One method of finding the stationary probability distribution, $\pi$, of an ergodic continuous-time Markov chain, $Q$, is by first finding its embedded Markov chain (EMC). Strictly speaking, the EMC is a regular discrete-time Markov chain, sometimes referred to as a jump process. Each element of the one-step transition probability matrix of the EMC, $S$, is denoted by $s_{ij}$, and represents the conditional probability of transitioning from state $i$ into state $j$. These conditional probabilities may be found by

$$s_{ij} = \begin{cases} \dfrac{q_{ij}}{\sum_{k \neq i} q_{ik}} & \text{if } i \neq j, \\ 0 & \text{otherwise.} \end{cases}$$

From this, $S$ may be written as

$$S = I - \left(\operatorname{diag}(Q)\right)^{-1} Q,$$

where $I$ is the identity matrix and $\operatorname{diag}(Q)$ is the diagonal matrix formed by selecting the main diagonal from the matrix $Q$ and setting all other elements to zero.

To find the stationary probability distribution vector, we must next find $\varphi$ such that

$$\varphi S = \varphi,$$

with $\varphi$ being a row vector, such that all elements in $\varphi$ are greater than 0 and $\|\varphi\|_1 = 1$. From this, $\pi$ may be found as

$$\pi = \frac{-\varphi \left(\operatorname{diag}(Q)\right)^{-1}}{\left\| \varphi \left(\operatorname{diag}(Q)\right)^{-1} \right\|_1}.$$

($S$ may be periodic, even if $Q$ is not. Once $\pi$ is found, it must be normalized to a unit vector.)
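The two steps of the embedded-chain method can be sketched as follows: form $S$ from $Q$ by dividing each off-diagonal rate by the total rate out of its state, then rescale a stationary vector $\varphi$ of $S$ by the mean holding times $-1/q_{ii}$ and normalise. The function names and the two-state example (with assumed rates 2 and 3) are hypothetical; for that example $\varphi = (1/2, 1/2)$ can be read off by symmetry, and $S$ is periodic even though $Q$ is not.

```python
from fractions import Fraction

def embedded_chain(q):
    """One-step matrix of the embedded chain: S = I - diag(Q)^{-1} Q."""
    n = len(q)
    return [[Fraction(0) if i == j else Fraction(q[i][j]) / Fraction(-q[i][i])
             for j in range(n)] for i in range(n)]

def is_stationary(phi, s):
    """Check phi S = phi exactly."""
    n = len(s)
    return all(sum(phi[i] * s[i][j] for i in range(n)) == phi[j] for j in range(n))

def ctmc_stationary_from_embedded(q, phi):
    """pi proportional to -phi diag(Q)^{-1}, normalised to sum to one."""
    weights = [Fraction(phi[i]) / Fraction(-q[i][i]) for i in range(len(q))]
    total = sum(weights)
    return [w / total for w in weights]

Q = [[-2, 2], [3, -3]]                      # assumed two-state rate matrix
S = embedded_chain(Q)                       # alternates deterministically
phi = [Fraction(1, 2), Fraction(1, 2)]      # stationary for S by symmetry
pi = ctmc_stationary_from_embedded(Q, phi)
print(S, is_stationary(phi, S), pi)
```

The rescaling step reflects that the continuous-time chain spends longer, on average, in states with a small exit rate, so states visited equally often by the jump chain can still carry different stationary weights.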

Another discrete-time process that may be derived from a continuous-time Markov chain is a δ-skeleton: the (discrete-time) Markov chain formed by observing X(t) at intervals of δ units of time. The random variables X(0), X(δ), X(2δ), ... give the sequence of states visited by the δ-skeleton.

See also

- Kolmogorov equations (Markov jump process)

Notes

- ^ a b Ross, S.M. (2010). Introduction to Probability Models (10 ed.). Elsevier. ISBN 978-0-12-375686-2.

- ^ Norris, J. R. (1997). "Continuous-time Markov chains I". Markov Chains. pp. 60–107. doi:10.1017/CBO9780511810633.004. ISBN 9780511810633.

- ^ Norris, J. R. (1997). "Continuous-time Markov chains II". Markov Chains. pp. 108–127. doi:10.1017/CBO9780511810633.005. ISBN 9780511810633.


Source: https://en.wikipedia.org/wiki/Continuous-time_Markov_chain
